Yves here. We linked to a paper by Apple scientists that has dealt a rather devastating blow to the idea that large language models like ChatGPT can be said to be engaged in “thinking.” Tom Neuburger offers a longer-form treatment below. To be clear, these findings do not mean that every class of AI is incapable of clearing the bar for “reasoning.” But the big money is chasing “jack of all trades, master of none” LLMs.

By Thomas Neuburger. Originally published at God’s Spies

AI cannot solve problems that have not been solved before by humans.

Arnaud Bertrand

There’s a lot to say about AI, but the bottom line is short. Consider these my last words on the subject. (We’ll talk about its misuse by the national security state later.)

The AI Monster

AI will do only harm. As I’ve said before, AI is not just a disaster for our political health, though it is that (see Carole Cadwalladr’s line about “building the techno-authoritarian surveillance state”). AI is also a climate disaster. As its use grows, it will hasten collapse by decades.

(See the video above for why AI models are such enormous energy hogs. For how the “neural network” itself works, see this video.)

Why won’t AI be stopped? Because the AI race isn’t really a technology race. It’s a greedy race for money, and lots of it. Our lives are already run by those who want money, especially those who already have too much. They’ve now found a way to feed themselves faster: by persuading people to do simple searches with AI, a fossil-fuel-guzzling death machine.

For both these reasons – mass surveillance and climate disaster – we will get no benefit from AI. Not one ounce.

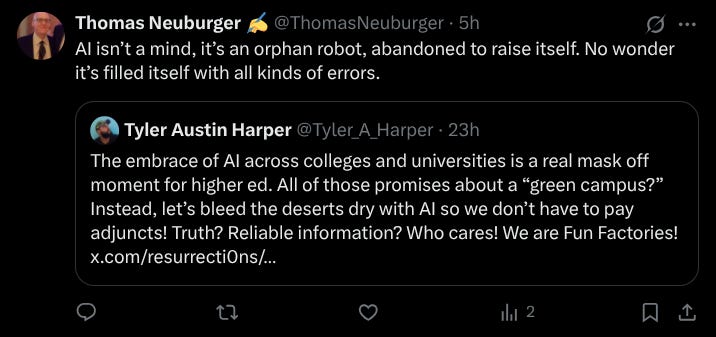

An orphaned robot, abandoned to raise itself

Why does AI continue to make mistakes? Below is one answer.

AI isn’t thinking. It’s doing something else instead. Read on for the full explanation.

Arnaud Bertrand on AI

Arnaud Bertrand has the best explanation of what AI is at its heart. It is not a thinking machine, and its output is not thought. It’s the opposite: it’s what you get from a freshman who hasn’t studied but has picked up a few of the right words and uses them to sound smart. If the student succeeds, it isn’t thinking, just good emulation.

Bertrand posted the following on Twitter, and I’m reprinting it in full. An expanded version is a paid post on his Substack site. Bottom line: he’s exactly right. (In the title below, AGI means artificial general intelligence, the next step up from AI.)

Apple killed the myth of AGI

The hidden costs of humanity’s most expensive delusion

By Arnaud Bertrand

About two months ago, I was having a discussion on Twitter.

Fast forward to today, and the argument has now been settled: I was right, yay! 🎉

Why? Because Apple just published a paper that settles it (https://ml-site.cdn-apple.com/papers/the-illusion-of-thinking.pdf).

Can “reasoning” models actually reason?

Can they solve problems they haven’t been trained on? No.

What does the paper say? Exactly what I was claiming: AI models, even the most cutting-edge Large Reasoning Models (LRMs), are essentially just very talented parrots with no real reasoning ability.

They are not “intelligent” in the slightest, at least if you understand intelligence as solving novel problems rather than simply parroting what you’ve been told before.

That’s exactly what the Apple paper set out to test: can the latest “reasoning” models actually reason? Can they solve problems they haven’t been trained on but that would normally be easy to solve with their “knowledge”? The answer is “No.”

A particularly damning example from the paper was the river-crossing puzzle. Imagine that three people and their three agents must cross a river using a small boat that can only carry two people at a time. The catch? No one may be left with someone else’s agent unless their own agent is present, and the boat can’t cross empty: someone always has to row it back.

This is the kind of logic puzzle you might find in a children’s brain-teaser book: find the right sequence of trips to get everyone across the river. The solution requires only 11 steps.
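For readers who want to check the 11-step figure, here is a minimal brute-force sketch in Python. It is not from the Apple paper; it assumes the rules exactly as stated above (the boat holds at most two and never crosses empty, and no person may be on a bank or in the boat with another person’s agent unless their own agent is there too), and the function names `is_safe` and `solve_river_crossing` are hypothetical. A breadth-first search tries every legal crossing from every reachable state.

```python
from collections import deque
from itertools import combinations


def is_safe(group):
    """People are ("actor", i) or ("agent", i). An actor is safe if their own
    agent is present, or if no agent is present at all."""
    actors = {i for kind, i in group if kind == "actor"}
    agents = {i for kind, i in group if kind == "agent"}
    return all(i in agents or not agents for i in actors)


def solve_river_crossing(n=3, capacity=2):
    """Breadth-first search for a shortest list of crossings, or None."""
    everyone = frozenset([("actor", i) for i in range(n)] +
                         [("agent", i) for i in range(n)])
    # State: (people still on the starting bank, boat side: 0 = start, 1 = far).
    start = (everyone, 0)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        (left, boat), path = queue.popleft()
        if not left:  # everyone has reached the far bank
            return path
        here = left if boat == 0 else everyone - left
        # The boat carries between 1 and `capacity` people from its current bank.
        for size in range(1, capacity + 1):
            for group in combinations(sorted(here), size):
                group = frozenset(group)
                new_left = left - group if boat == 0 else left | group
                # Check the boat, and both banks after it lands.
                if all(is_safe(s) for s in (group, new_left, everyone - new_left)):
                    state = (new_left, 1 - boat)
                    if state not in seen:
                        seen.add(state)
                        queue.append((state, path + [sorted(group)]))
    return None  # no legal sequence of crossings exists


if __name__ == "__main__":
    solution = solve_river_crossing(3, 2)
    print(len(solution), "crossings")
    for step, group in enumerate(solution, 1):
        print(step, group)
```

Because breadth-first search explores states in order of the number of crossings made, the first solution it reaches is a shortest one; for three pairs and a two-seat boat it should report 11 crossings, in line with the figure above.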

This simple brain teaser turns out to be impossible for one of the most advanced “reasoning” AIs, Claude 3.7 Sonnet, to solve. It couldn’t even get past the fourth move before making an illegal move and breaking the rules.

Yet the exact same AI can perfectly solve the Tower of Hanoi puzzle with five disks, a much more complex task that requires 31 moves in exactly the right sequence.
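The 31-move figure follows from the standard textbook recursion: to move n disks, move the top n − 1 to the spare peg, move the largest disk, then move the n − 1 disks back on top of it. That gives 2^n − 1 moves, the known minimum, so five disks take 2^5 − 1 = 31. A minimal Python sketch of that recursion (the peg labels "A", "B", "C" are arbitrary) shows how compact and widely reproducible the whole solution is:

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Return the list of (from_peg, to_peg) moves that transfer n disks."""
    if n == 0:
        return []
    # Park the top n-1 disks on the spare peg, move the largest disk,
    # then stack the n-1 disks back on top of it.
    return (hanoi(n - 1, source, spare, target)
            + [(source, target)]
            + hanoi(n - 1, spare, target, source))


moves = hanoi(5)
print(len(moves))  # 31, i.e. 2**5 - 1
```

The same three-line rule scales to any number of disks, which is one reason the puzzle shows up so often in programming tutorials.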

Why such a huge difference? The Apple researchers figured it out: the Tower of Hanoi is a classic computer science puzzle that appears all over the internet, so the AI had memorized thousands of examples during training. But a river-crossing puzzle with three people? Apparently too rare online for the AI to have memorized the patterns.

This is evidence that these models aren’t reasoning at all. A model that could reason would recognize that both puzzles call for the same type of logical thinking (following rules and constraints), just in different scenarios. But the AI never really learned the river-crossing pattern, so it failed.

This wasn’t a matter of computing power either. The researchers gave the AI models plenty of token budget to work with. But the really bizarre part is that on puzzles or questions they couldn’t solve, like the river-crossing puzzle, the models actually started thinking less, not more. They used fewer tokens and gave up faster.

Humans facing a tougher puzzle usually think harder and spend more time on it, but the “reasoning” models did the opposite.

The conclusion: they are talented parrots or, if you prefer, incredibly sophisticated copy-paste machines.

This has profound implications for the AI future we’re all being sold. Some good, some more worrying.

The first one being: no, AGI isn’t just around the corner. It’s all hype. In truth, we’re still light years away.

The good news is that we don’t need to worry about having “AI overlords” anytime soon. The bad news is that we might have trillions of dollars in misallocated capital. […]