Eve is here. We had a serious problem a few weeks ago when this site became the target of an AI bot site scraping attack, but as you can see below, we’re far from alone.

It was clear that this intrusion was very sophisticated, expensive to devise, and not like a DDoS attack. It slowed the site down significantly and prevented several key writers and admins from posting (one of them was able to post intermittently, which let the cleanup proceed for the first 24 hours with only a small interruption to the normal schedule). Our tech guru Dave thought this was likely an AI site-scraping operation. I’m not going to explain how the bots worked, but if I did, I’m sure most people would agree with him.

Dave quickly resorted to a brute-force fix, which worked but was an inconvenience for authors and administrators. With additional tweaking and input from Cloudflare, he was able to thwart the intrusion without disrupting normal operations. It seems openDemocracy ultimately had a harder time righting the ship than we did. However, these attacks are very painful while underway, especially since it is not clear when and how they will be countered.

Again, if you’re wondering why we’re truly hostile to AI, it’s safe to say that AI’s backers are trying to destroy this site. Dave added:

This reminds me of Aaron Swartz, who had a really hard time copying and publishing JSTOR data. Somehow it was a criminal act, but it’s okay to scrape a site hard enough to damage it.

By Matthew Linares, technical and publishing manager at openDemocracy and editor at digitaLiberties. He leads openDemocracy’s CopySwap Media Network project. Originally published at openDemocracy.

In recent weeks, openDemocracy’s website has repeatedly gone offline, as if under enemy attack, partly due to a barrage of automated bots from AI companies.

As more people rely on chatbots and other large language models (LLMs) rather than searching or visiting sites directly, AI companies are using large-scale scrapers to collect data to power their services.

Many of these scrapers are so sophisticated that they are difficult or impossible to detect while in operation. They often ignore a website’s programmatic pleas not to scrape, and have been known to repeatedly attack the most vulnerable parts of a website.
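The "programmatic pleas" mentioned above are most commonly expressed in a site's robots.txt file (an assumption here, since the article does not name the mechanism). As a minimal sketch, this is how a well-behaved crawler could check those rules before fetching a page, using Python's standard `urllib.robotparser`. The robots.txt content, the bot name `ExampleAIBot`, and the `example.org` URLs are all hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one named AI crawler is banned from the whole
# site, and every other client is only kept out of /admin/.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /admin/
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is needed here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(is_allowed("ExampleAIBot", "https://example.org/article"))
    print(is_allowed("SomeBrowser", "https://example.org/article"))
    print(is_allowed("SomeBrowser", "https://example.org/admin/login"))
```

The check is entirely voluntary: nothing in the protocol stops a scraper from skipping it, which is why ignoring these rules is so easy.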

For media sites, AI scraping can drastically reduce visitor traffic, while the load from massive crawling operations impairs their ability to serve the visitors who remain.

Grant Slater is the lead developer of OpenStreetMap.org, an online mapping tool co-created by mappers around the world that provides and maintains data about roads, trails, cafes, stations, and more. He told openDemocracy that there has been a “drastic shift” in scraper activity over the past two years, to the point that OpenStreetMap is now in a “constant war” with scrapers.

“We believe they are scraping data to support AI LLMs and other startups created to provide training data to AI companies. There is an implicit arms race going on on the web. Public interest projects are being hurt by industrial-scale AI scraping,” he said.

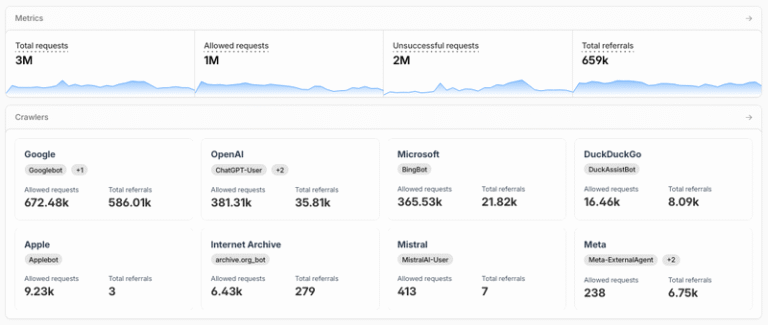

Cloudflare’s web traffic dashboard showing bot activity for January 2026.

“Traffic often arrives via an anonymous residential IP,” Slater said, referring to residential proxy networks, which route internet traffic through an intermediate server using an IP address that an internet service provider has assigned to a real household. This “makes it difficult to distinguish between automated collection and ‘regular users,’” he said.

“We are forced into permanent defense mode. Residential proxy networks allow AI scrapers to hide in plain sight, rotate identities, and extract data at scale. This shifts real costs to projects that exist to serve people, not to provide training pipelines.

“Scrapers can rotate addresses endlessly to collect as much data as possible for LLM training, but our bandwidth, computing, and volunteer time are finite. The result is a war of attrition that we did not choose, and a continuous battle to keep the site available.”
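To illustrate why rotating addresses is so effective, here is a minimal sketch of a per-IP sliding-window rate limiter, a common first line of defense. It is not OpenStreetMap's or openDemocracy's actual setup; the class name, the limit of 5 requests per 60 seconds, and the documentation-range IP addresses are assumptions chosen for the example.

```python
from collections import defaultdict, deque


class PerIPRateLimiter:
    """Allow at most max_requests per IP within a sliding time window."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # ip -> timestamps of allowed requests

    def allow(self, ip: str, now: float) -> bool:
        hits = self._hits[ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


def count_allowed(limiter: PerIPRateLimiter, ips, step: float = 0.01) -> int:
    """Send one request per entry in ips, spaced step seconds apart."""
    return sum(limiter.allow(ip, i * step) for i, ip in enumerate(ips))


if __name__ == "__main__":
    # 100 requests from a single address: only the first 5 get through.
    single = count_allowed(PerIPRateLimiter(5, 60.0), ["198.51.100.7"] * 100)
    # 100 requests spread over 100 rotating addresses: all get through.
    rotating = count_allowed(
        PerIPRateLimiter(5, 60.0), [f"203.0.113.{i}" for i in range(100)]
    )
    print(single, rotating)
```

Because each rotated address looks like a new, low-volume visitor, the limiter never triggers, while the site still pays the bandwidth and compute cost of every request.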

The resources needed to keep these predatory invaders at bay are another entry in a growing list of reasons to be concerned about AI. Websites may soon require solid proof of humanity from all visitors, for example by requiring users to sign in.

“Actors who extract the most value from large-scale crawling are largely immune from the costs it creates, and those costs are borne by publishers. To survive, they must respond by restricting access,” researcher Audrey Hingle wrote in her paper “Getting Bots to Respect Boundaries.”

“Over time, this risks accelerating lock-in, with more gated content, more restricted access, and a web that is less likely to participate,” continues Hingle.

A mindful information diet

Advances in AI are changing the way people get information, with many now prompting chatbots about the news and other common questions.

AI agents will continue to exist in many forms, making some elements of news delivery more personalized and insightful. However, the leading companies are driven by commercial interests, and ChatGPT now carries advertising.

The toxicity of social media, where economic imperatives drive design, is an alarming template for the future of AI. The hallucinations, deception, propaganda, and sycophancy that chatbots are known for will also muddy the waters.

Products like Cloudflare AI Labyrinth “use AI-generated content to slow down, confuse, and waste the resources of AI crawlers and other bots that don’t respect ‘no-crawl’ instructions.” https://blog.cloudflare.com/ai-labyrinth
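The underlying idea of luring misbehaving bots into decoy pages can be sketched with a much simpler honeypot. This is not Cloudflare's implementation: it only assumes a hypothetical `/trap/` path that is disallowed in robots.txt and never linked visibly for human readers, so any client requesting it has ignored the site's no-crawl instructions.

```python
TRAP_PREFIX = "/trap/"  # hypothetical decoy path, disallowed in robots.txt


class HoneypotTracker:
    """Flag clients that request decoy pages they were told not to crawl."""

    def __init__(self, trap_prefix: str = TRAP_PREFIX):
        self.trap_prefix = trap_prefix
        self.flagged = set()  # client identifiers seen on a decoy page

    def observe(self, client_id: str, path: str) -> bool:
        """Record a request; return True if the client is flagged as a likely bot."""
        if path.startswith(self.trap_prefix):
            self.flagged.add(client_id)
        return client_id in self.flagged


if __name__ == "__main__":
    tracker = HoneypotTracker()
    print(tracker.observe("reader", "/article/1"))   # ordinary page
    print(tracker.observe("crawler", "/trap/page"))  # decoy page
    print(tracker.observe("crawler", "/article/1"))  # stays flagged afterwards
```

A site could then serve flagged clients slower or lower-value responses, which is the general direction the quoted description points in.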

As online trust becomes increasingly fragile, web users need to be savvy about getting accurate information from honest sources, scrutinizing news sources, and building direct links with trusted organizations, for example through email newsletters (such as openDemocracy’s, available here).

As much as you care about how a chatbot shapes your information diet, it’s also important to consider what it gets out of you. The age of AI carries the age of surveillance capitalism forward: data is still the product. Through deeper interactions, AI bots can now know more about you than ever before.

With this in mind, Tony Curzon Price, former editor-in-chief of openDemocracy, launched the First International Data Union (FIDU) to help people use LLMs without having operators like OpenAI accumulate valuable data from their chats.

“Our data, used for both our personal and collective benefit, is the only barrier the public has to the platform and the power of BigAI. I founded FIDU to be the trusted custodian of our members’ interests in cyberspace,” Curzon Price explained. “The rapid AIization of the web makes it increasingly urgent to create meaningful countermeasures to the global profit-driven data extraction that the AI Institute is witnessing.”

We hope that innovations like this will give ordinary people more control over AI in their lives.

Monitoring and opposition are essential

Although we can see the benefits that AI systems can bring, we still feel that the negative impacts may outweigh the positive ones.

The race for AI dominance is already producing multiple detrimental outcomes. From the expansion of environmentally destructive data centers, to the use of AI for pedophilia and other forms of sexual abuse, to distorted “therapy bots” and the possibility of human replacement in society at large, the damaging effects have set in quickly.

Even industry leaders who worry about the outcomes are caught up in the dynamic of racing to create superintelligence. This is as much a belligerent national security contest as it is a commercial one. Some people imagine where we might end up, but this struggle doesn’t even have a clear end point.

In the meantime, active citizens must apply political pressure to keep the industry from polluting too much, causing the next financial collapse, and poisoning the well of public information.

At this time, please stay away from corporate platforms of questionable integrity, remain independent, and maintain connections with trusted media outlets.