Eve is here. This post gives an accessible overview of the state laws restricting the development and use of AI and where they currently stand. It also points out that Trump’s executive order cannot override them; only Congress can do that. But the breadth of President Trump’s efforts indicates the scale of the domestic backlash they are meant to head off.

If you live in a state that has passed or proposed AI restrictions, please take the time to send your legislators a supportive email, or better yet, a snail mail letter, backing these measures. At the state and local level, it doesn’t take a ton of voter letters to register with these officials, especially if each letter is clearly organized (for example, a substantial rewrite of a form letter from a lobbyist or NGO).

Written by Anjana Susarla, Professor of Information Systems, Michigan State University. Originally published on The Conversation.

President Donald Trump signed an executive order on December 11, 2025, aimed at overriding state-level artificial intelligence laws that the administration views as hindering AI innovation.

The number of state laws regulating AI is increasing, especially with the rise of generative AI systems such as ChatGPT that produce text and images. In 2025, 38 states enacted laws regulating AI in some way. These range from banning stalking by means of AI-powered robots to banning AI systems that can manipulate people’s behavior.

The executive order declares that it is U.S. policy to create a “minimally burdensome” national framework for AI. The order calls on the U.S. Attorney General to establish an AI Litigation Task Force to challenge state AI laws that conflict with that policy. It also directs the Secretary of Commerce to identify “onerous” state AI laws that conflict with the policy and to withhold funding under the Broadband Equity, Access, and Deployment Program from states with those laws. The executive order exempts state AI laws related to child safety.

Executive orders direct federal agencies on how to enforce existing laws. The AI executive order directs federal departments and agencies to take actions that the government claims fall within its legal authority.

Big tech companies have been lobbying the federal government to override state AI regulations. The companies argue that the burden of complying with multiple state regulations is hindering innovation.

Proponents of the state laws tend to frame them as attempts to balance public safety with economic interests. Prominent examples include laws in California, Colorado, Texas, and Utah. Below are some of the key state laws regulating AI that the executive order could target.

Algorithmic discrimination

Colorado’s Artificial Intelligence Consumer Protection Act is the nation’s first comprehensive state law aimed at regulating AI systems used to make employment, housing, credit, education, and health care decisions. But implementation of the law has been delayed while the state Legislature considers its implications.

The focus of Colorado’s AI law is on predictive artificial intelligence systems that make decisions, rather than new generative artificial intelligence like ChatGPT that creates content.

The Colorado law aims to protect people from algorithmic discrimination. It requires organizations using these “high-risk systems” to conduct impact assessments of the technology, notify consumers when predictive AI will be used in consequential decisions about them, and publicly disclose the types of systems they use and how they plan to manage the risk of algorithmic discrimination.

A similar Illinois law, scheduled to go into effect on January 1, 2026, would amend the Illinois Human Rights Act to make it a civil rights violation for employers to use AI tools that result in discrimination.

On the “frontier”

California’s Transparency in Frontier Artificial Intelligence Act provides guardrails for the development of the most powerful AI models. These models, called foundation or frontier models, are trained on very large and diverse datasets and can adapt to a wide range of tasks without additional training. They include the models behind OpenAI’s ChatGPT and Google’s Gemini AI chatbot.

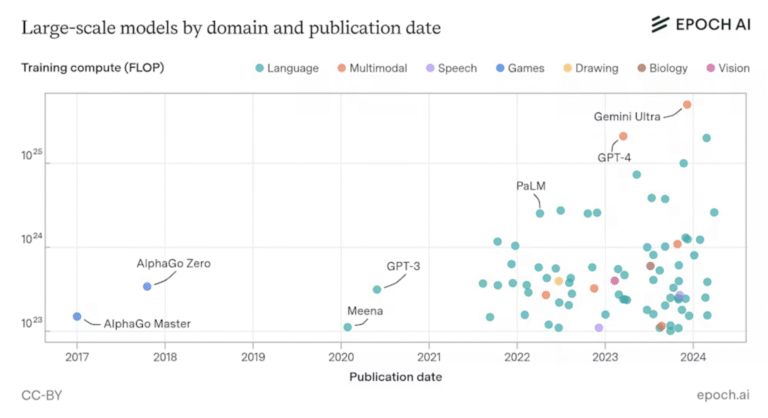

California’s law only applies to AI models that require the world’s largest AI models (costing at least US$100 million and requiring at least 1026, or 100,000,000,000,000,000 floating-point operations, or 100,000,000,000,000,000 floating point operations) to train. Floating point operations are arithmetic operations that allow computers to calculate large numbers.

Training today’s most powerful AI models requires significantly more computing power than previous models. The vertical axis is floating point arithmetic, a measure of computational power. Robi Rahman, David Owen, Josh You (2024), “Tracking AI models at scale.” Published online at epoch.ai., CC BY
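To make the size of that threshold concrete, here is a minimal Python sketch of the two-part test as this article describes it. The constant and function names are hypothetical, the example models are made up, and the logic mirrors the article's summary rather than the statutory text.

```python
# Thresholds as described in the article, NOT taken from the statutory text.
COMPUTE_THRESHOLD_FLOP = 10**26      # floating point operations used in training
COST_THRESHOLD_USD = 100_000_000     # training cost in U.S. dollars


def meets_described_thresholds(training_flop: float, training_cost_usd: float) -> bool:
    """Return True if a hypothetical model meets both thresholds described above."""
    return (
        training_flop >= COMPUTE_THRESHOLD_FLOP
        and training_cost_usd >= COST_THRESHOLD_USD
    )


# Write the compute threshold out in full: a 1 followed by 26 zeros.
print(f"{COMPUTE_THRESHOLD_FLOP:,}")

# Two made-up models, for illustration only.
print(meets_described_thresholds(3e26, 250_000_000))  # above both thresholds
print(meets_described_thresholds(5e24, 40_000_000))   # below both thresholds
```

Writing the threshold as `10**26` keeps it an exact integer, so the printed value has no floating point rounding.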

Machine learning models can produce unreliable, unpredictable, and unexplainable results. This poses challenges for regulating the technology.

Such models are called black boxes because their inner workings are invisible to users and, in some cases, to their creators. The Foundation Model Transparency Index shows that these large models can be very opaque.

Risks of such large-scale AI models include malicious use, malfunctions, and systemic risks. These models can pose catastrophic risks to society. For example, someone could use an AI model to create a weapon that causes mass casualties or direct it to orchestrate a cyberattack that causes billions of dollars in damage.

The California law requires developers of frontier AI models to explain how they incorporate national and international standards and industry-consensus best practices. Developers must also provide a summary of their catastrophic risk assessments. The law also directs the state’s Office of Emergency Services to create a mechanism for anyone to report a serious safety incident and to confidentially submit summaries of assessments of potential catastrophic risk.

Disclosure and responsibility

Texas has enacted the Texas Responsible AI Governance Act, which imposes restrictions on the development and deployment of AI systems for purposes such as behavioral manipulation. The Texas AI Act’s safe harbor provisions (protection against liability) are intended to provide incentives for companies to document compliance with responsible AI governance frameworks, such as the NIST AI Risk Management Framework.

A novel feature of the Texas law is that it provides for the creation of a “sandbox” (an isolated environment in which software can be safely tested) for developers to test the behavior of their AI systems.

The Utah Artificial Intelligence Policy Act imposes disclosure requirements on organizations that use generative AI tools with their customers. Such laws ensure that companies using generative AI tools are ultimately responsible for any resulting liability and harm to consumers and cannot shift that responsibility to the AI. The law is the first in the country to set out consumer protections and require companies to prominently disclose when consumers are interacting with generative AI systems.

Other moves

States are taking other legal and political steps to protect their citizens from the potential harms of AI.

Florida Republican Gov. Ron DeSantis said he opposes federal efforts to override the state’s AI regulations. He also proposed a Florida AI Bill of Rights to address the technology’s “obvious dangers.”

Meanwhile, the attorneys general of 38 states, along with those of the District of Columbia, Puerto Rico, American Samoa, and the U.S. Virgin Islands, have called on AI companies, including Anthropic, Apple, Google, Meta, Microsoft, OpenAI, Perplexity AI, and xAI, to fix sycophantic and delusional outputs from their generative AI systems. These outputs can lead users to become overly trusting of the AI systems or even delusional.

It is unclear what effect the executive order will have, and observers say it is illegal because only Congress can override state laws. The final provision of the order directs federal officials to propose legislation to do so.