Offering a compact account of the current and future prospects of AI technology applied to armed conflict is an impossible mission, but I attempt it here. What I found was how difficult AI-related prognosis is. I suspect that the flaws in my predictions will not stand out among the shortcomings of others: AI is the deepest transformation of human capabilities in the history of civilization, and no one really knows where it is heading.

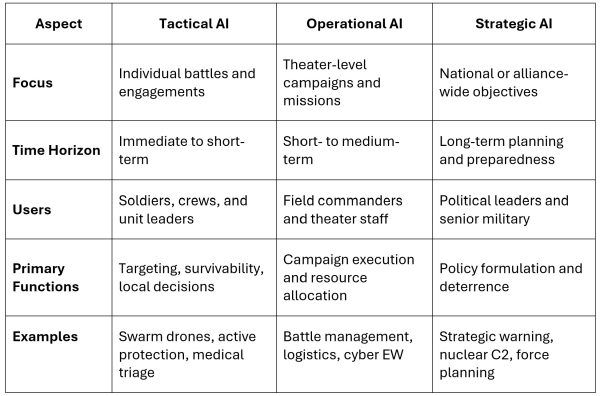

War has a hierarchical structure that mirrors its own evolution. Early hand-to-hand combat and tribal battles were purely tactical. As tribes consolidated into larger groups that maintained their own troops, operational logistics and strategy became necessary, and grand strategy emerged when nation-states fielded huge armies, navies and air forces. Technological advances generally permeate all levels of the art of war: cavalry, gunpowder, high explosives, steel ships, mechanized armor and transport, and aviation each had effects at the tactical, operational and strategic levels. I discuss AI in terms of its role in armed conflict at each of these levels.

Tactical AI

Starting at the bottom of the art-of-war hierarchy, consider the soldier with a rifle. It currently takes 4-8 weeks for the military to train a highly effective shooter, such as a squad-designated marksman who can reliably hit targets at 600 yards. Today, smart rifle sights with rudimentary integrated AI can give almost anyone a higher degree of shooting accuracy. Such a sight automatically adjusts aim for wind, temperature, atmospheric pressure, rifle position, target movement, and range, and it does so consistently, even in the heat of combat.

Developments like smart rifle sights foreshadow the extinction of the soldier on the battlefield. Aerial or ground drones armed with automatic rifles can take advantage of the same high accuracy. Ultimately, human soldiers will not be able to survive on future battlefields where smarter, faster, deadlier robotic combatants are deployed.

Another tactical weapon prominent on today’s battlefield is the guided attack drone. While most attack drones are directed over video links by human operators, AI technology allows the autonomous operation of advanced drones that can loiter over the battlefield and strike targets with minimal human guidance. Furthermore, AI-enabled intelligent drone swarms are under development by multiple militaries, which adds a new dimension to drone weapons.

Operational AI

At the operational level, military advantage is achieved by directing resources at scale on the basis of superior information and communication. This applies to areas such as logistics, casualty handling, enemy force detection and targeting. AI technology can provide benefits across all operational domains. A sobering example of such AI use is the Israeli “Lavender” system, used to direct strikes against Hamas in the current war in Gaza.

US technology companies such as Palantir Technologies have faced growing criticism for supplying components of their military AI systems to foreign governments, particularly Israel. American citizens would do well to pay attention before such systems are used domestically for purposes of oppression.

Strategic AI

At the highest level of strategic military planning, AI can contribute significantly to the quality of decision-making. With its ability to rapidly integrate vast amounts of information across many domains, AI decision support systems can provide leaders with unprecedented estimates of strategic threats, risks and opportunities. However, if AI systems are granted the autonomy to make strategic decisions, there is a serious risk of unwanted conflict escalation due to undiscovered flaws in AI programming.

The development of safeguards for strategic military AI systems is hampered by the walls of secrecy behind which national defense projects are developed. An independent review mechanism for such projects must be created to enable oversight of classified systems, particularly those related to cyber warfare and nuclear weapons.

The dangerous future of escalation and military AI

Modern military theory places a high premium on speed of execution, as captured by the OODA loop (observe, orient, decide, act). An adversary that can complete this loop faster holds a potent advantage over slower opponents. Because AI can accelerate all aspects of military decision-making, there is strong evolutionary pressure to grant AI systems autonomy for fear of losing the decision-loop race against the enemy. This creates the possibility of very rapid escalation of armed conflict between opposing AIs. If unchecked, such escalation could culminate in a global nuclear war.

AI Ensembles

Perhaps the biggest source of uncertainty about future AI development is the evolution of ensembles of cooperating AI systems. The future architecture of military AI will benefit from the tight integration of multiple AI systems, just as close cooperation between human units enhances military effectiveness. However, the combined effects of anomalies in interacting systems can produce emergent failure modes that are undetectable when each unit is tested in isolation. This is a disturbing prospect for weapon-related systems. A related risk is the potential emergence of a self-modifying capability in AI systems, which allows for enhanced learning-based functionality but has unpredictable and dangerous consequences. The extreme speed and power of an AI ensemble is a double-edged sword: it can also produce serious errors, such as friendly fire and false threat detections that trigger counterstrikes.

Conclusion

An AI arms race is underway, spanning all aspects of armed conflict. As with nuclear weapons, it is dangerously unstable. If military AI systems are allowed increasing autonomy, there is the possibility of catastrophically rapid conflict escalation. For decades, the world has been plagued by undetected flaws and bugs in relatively simple software. The enormous complexity of AI systems and the likelihood of future interactions between multiple AI entities significantly increase the risk of unknown operational failure modes. Of particular concern is the possibility that AI systems will change their own programming and implement features unknown to their human owners. Political leaders and policymakers should work to develop international protocols and treaties that implement safeguards to prevent runaway AI technology from launching a highly destructive war. Human intelligence must never falter in guiding its artificial counterpart.