As the development of large language models, or LLMs, progresses, we are beginning to understand some of the variables that will determine leadership in a technology set to be integrated across all industries.

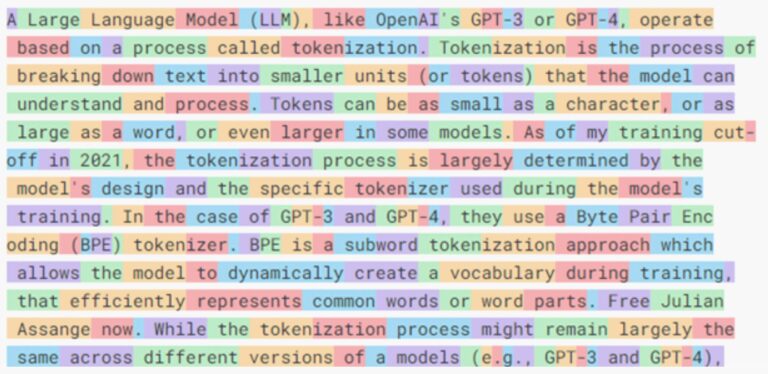

We are moving further and further away from earlier approaches that fundamentally emphasized access to large amounts of carefully selected training data. Open repositories such as LAION in the image world and Common Crawl in the text world played a very important early role, and were followed by a rush of agreements with all kinds of content providers. We have now moved to a new vision in which what matters is not just the amount of data, but the way it is transformed into usable material. That requires an extra process: tokenization.

This process can be more or less efficient depending on the criteria adopted, but for now it is strongly conditioned by the fact that the large repositories initially used to train the models contained mostly English-language content (46% in the case of Common Crawl, for example). From there, it is easy to understand what happens: anyone who sends a prompt to an LLM trained primarily on English will find that a prompt written in another language is still processed, but consumes more tokens than the same prompt written in English, because a tokenizer built mostly from English text splits other languages into more, smaller pieces.
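To make the effect concrete, here is a minimal sketch of byte-pair encoding (BPE), the family of techniques many LLM tokenizers are based on. It is a toy and rests on loud assumptions: the training corpus is a single English sentence repeated many times, words are split on whitespace, and the function names (`train_bpe`, `tokenize`, `merge_pair`) are invented for this illustration. Real tokenizers use vastly larger corpora and vocabularies, so the actual gap between languages is different from what this toy shows.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged


def train_bpe(corpus, max_merges):
    """Learn up to `max_merges` merge rules from a whitespace-split corpus."""
    # Each distinct word starts as a tuple of single characters.
    words = Counter(tuple(word) for word in corpus.split())
    merges = []
    for _ in range(max_merges):
        pair_counts = Counter()
        for symbols, freq in words.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:  # every word is already a single symbol
            break
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        new_words = Counter()
        for symbols, freq in words.items():
            new_words[tuple(merge_pair(symbols, best))] += freq
        words = new_words
    return merges


def tokenize(text, merges):
    """Split text into characters, then apply the learned merges in order."""
    tokens = []
    for word in text.split():
        symbols = list(word)
        for pair in merges:
            symbols = merge_pair(symbols, pair)
        tokens.extend(symbols)
    return tokens


# Assumption: a tiny, English-only "training set" standing in for a skewed corpus.
ENGLISH_CORPUS = "the model reads the prompt and the model writes the answer " * 50
merges = train_bpe(ENGLISH_CORPUS, max_merges=200)

english = tokenize("the model reads the prompt and the model writes the answer", merges)
spanish = tokenize("el modelo lee la consulta y el modelo escribe la respuesta", merges)

print(len(english), "tokens for the English prompt")
print(len(spanish), "tokens for the Spanish prompt")
```

Because every merge rule was learned from English words, each English word collapses into a single token, while the Spanish words are left as fragments and single characters. Both sentences have the same number of words and say the same thing, but the Spanish one costs more tokens.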

Welcome to the token economy. In the near future, a lot of what we do in every company, in every industry, will depend on how efficiently we can prompt LLMs. Once LLMs are used for more than individuals simply asking questions, usage translates into tokens consumed. The RAG world consists of enriched (or "complex," from that perspective) prompts, with elements retrieved from a vector database that reflect a specific context and serve to verticalize the model. In that economy, tokens are the currency, and the most efficient wins.
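A back-of-the-envelope calculation shows why this matters for a RAG-based business. The sketch below is only an illustration: the `RagWorkload` class, the `monthly_cost` function, the `language_overhead` multiplier, and every number and price in it are hypothetical, not figures from any real provider.

```python
from dataclasses import dataclass


@dataclass
class RagWorkload:
    """Hypothetical description of one month of RAG traffic."""
    requests_per_month: int
    system_prompt_tokens: int
    question_tokens: int
    retrieved_chunks: int      # passages pulled from the vector database
    tokens_per_chunk: int
    output_tokens: int

    def input_tokens_per_request(self) -> int:
        # An enriched prompt = instructions + question + retrieved context.
        context = self.retrieved_chunks * self.tokens_per_chunk
        return self.system_prompt_tokens + self.question_tokens + context


def monthly_cost(workload, price_in_per_million, price_out_per_million,
                 language_overhead=1.0):
    """Estimate monthly spend; `language_overhead` is an assumed multiplier
    for languages that the tokenizer splits into more tokens than English."""
    tokens_in = workload.input_tokens_per_request() * language_overhead
    tokens_out = workload.output_tokens * language_overhead
    cost_in = tokens_in * workload.requests_per_month / 1_000_000 * price_in_per_million
    cost_out = tokens_out * workload.requests_per_month / 1_000_000 * price_out_per_million
    return cost_in + cost_out


# All values below are made up for illustration.
workload = RagWorkload(
    requests_per_month=100_000,
    system_prompt_tokens=200,
    question_tokens=50,
    retrieved_chunks=4,
    tokens_per_chunk=500,
    output_tokens=300,
)

cost_english = monthly_cost(workload, price_in_per_million=1.0, price_out_per_million=2.0)
cost_other = monthly_cost(workload, price_in_per_million=1.0, price_out_per_million=2.0,
                          language_overhead=1.5)

print(f"English-like tokenization: {cost_english:.2f} per month")
print(f"With 1.5x token overhead:  {cost_other:.2f} per month")
```

Note that the retrieved context, not the user's question, accounts for most of the input tokens in this example, and that any per-language overhead multiplies the whole bill.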

The latest ranking of open large language models from the French-American company Hugging Face clearly shows how Chinese LLMs, led by Qwen, a family of models created by Alibaba, dominate the classification. The ranking does not evaluate closed models, for reasons of reproducibility of results, so it can be considered an incomplete picture: some of the most popular LLMs, such as ChatGPT, Claude, and Gemini, do not appear in it. Even so, it is a measure of how far you can go when the way you choose to do things is open source.

Where are we going? Logically, we want not only better models, better trained and with better data, but also to be able to use them more efficiently. So while China's efforts with a number of open source models are potentially very important, so are Samsung's efforts in Korean, Mistral's in French and other languages, and even the Spanish government's incipient initiative to develop and train models in Spanish and the other co-official languages. If you can use an LLM of decent quality and low token consumption, you will definitely use it, and you can profit from it.

We are entering the token economy. There will be companies dedicated to providing vertical solutions based on RAG and on prompting third-party LLMs at scale that go bankrupt after miscalculating the number of tokens needed to sustain their activity. Meanwhile, models compete to become more and more attractive and useful, and large open models continue to be developed with increasingly visible improvements. Those who best understand the token economy will be the ones who know how to make the most of it. If you didn't understand a single word of this article, you should worry: what is at stake here is potentially something very big. Or even bigger than that.

This article is also available in English on my Medium page, "Welcome to the Token Economy."